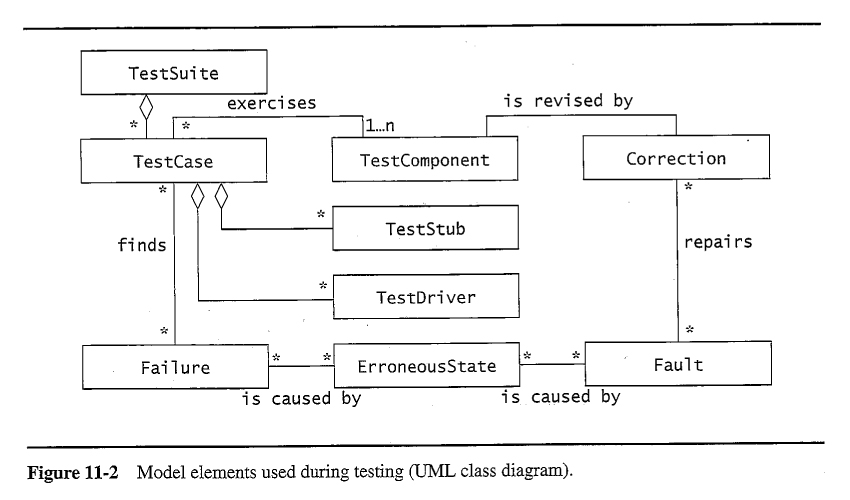

11.3.1 - Faults, Erroneous States, and Failures

11.3.2 - Test Cases

11.3.3 - Test Stubs and Drivers

- Test Stubs simulate the behavior of units called by the unit being tested.

- Test Drivers simulate the behavior of units that call the unit being tested.
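As a minimal sketch of the two roles above, the following assumes a hypothetical unit `order_total` that calls a tax service. The names `TaxServiceStub`, `order_total`, and `driver` are invented for illustration and do not come from the text.

```python
class TaxServiceStub:
    """Test stub: stands in for a unit that is CALLED BY the unit under test."""

    def rate_for(self, region):
        # Return a canned answer instead of performing a real lookup.
        return 0.10


def order_total(prices, region, tax_service):
    """Hypothetical unit under test: sums the prices and adds tax."""
    subtotal = sum(prices)
    return round(subtotal * (1 + tax_service.rate_for(region)), 2)


def driver():
    """Test driver: stands in for a unit that CALLS the unit under test."""
    result = order_total([10.00, 5.00], "IL", TaxServiceStub())
    return result == 16.50
```

Calling `driver()` exercises `order_total` in isolation, since neither its real caller nor the real tax service has to exist yet.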

11.3.4 - Corrections

Correcting one fault can often introduce new faults. The following techniques can help reduce this problem:

- Problem Tracking involves keeping track of what faults have been found, the actions taken to correct them, what unit(s) were affected by the corrections, etc. This is covered in further detail in Chapter 13 on configuration management.

- Regression Testing involves the re-execution of previous tests following a change, to identify any new faults that may have been introduced as part of the correction.

- Rationale Maintenance documents the rationale for any changes as well as the rationale for the original design and implementation. Having this knowledge decreases the number of new faults introduced in the process of correcting other faults.
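Regression testing in particular can be sketched in a few lines. The example below assumes a hypothetical `clamp` unit whose "correction" fixes one fault and introduces another; all names and test values are invented for illustration.

```python
def run_suite(unit, suite):
    """Re-execute every recorded test case and return the ones that fail."""
    return [(args, expected) for args, expected in suite if unit(*args) != expected]


# Test cases that passed before the change: ((x, lo, hi), expected result).
previous_tests = [((-5, 0, 10), 0), ((5, 0, 10), 5)]


def clamp_v1(x, lo, hi):
    # Original version. Known fault: values above hi are not clamped.
    return lo if x < lo else x


def clamp_v2(x, lo, hi):
    # "Corrected" version: fixes the upper bound but drops the lower-bound check.
    return hi if x > hi else x


# Re-running the old tests against the corrected unit exposes the new fault.
regressions = run_suite(clamp_v2, previous_tests)
```

Here `regressions` holds the previously passing case that the correction broke, which is exactly what regression testing is meant to reveal.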

11.4.1 - Component Inspection

- Inspections involve rigorous procedures for examining artifacts ( code, documents, design models, etc. ) for faults.

- Many studies have found that inspections are more effective at finding faults than testing. ( However, neither is sufficient by itself. )

- Formal inspection procedures often involve inspection teams of 3 to 4 members. The developer who produced the artifact is generally not included, other than as a "witness" who is allowed to speak only when asked a direct question.

- Inspections compare the specifications against the artifacts.

- Inspections often rely upon standardized checklists of items to inspect, and quality standards documents describing how things are supposed to be done.

- One benefit of inspections is that they can be applied to unfinished code and other artifacts that cannot be "executed" for testing purposes.

- Fagan's method consists of 5 steps:

    - Overview

    - Preparation

    - Inspection Meeting

    - Rework

    - Follow-up

- Approaches differ in the amount of work done during the preparation phase versus the meeting phase.

- This book does not cover inspections to the extent that most software engineering texts do.

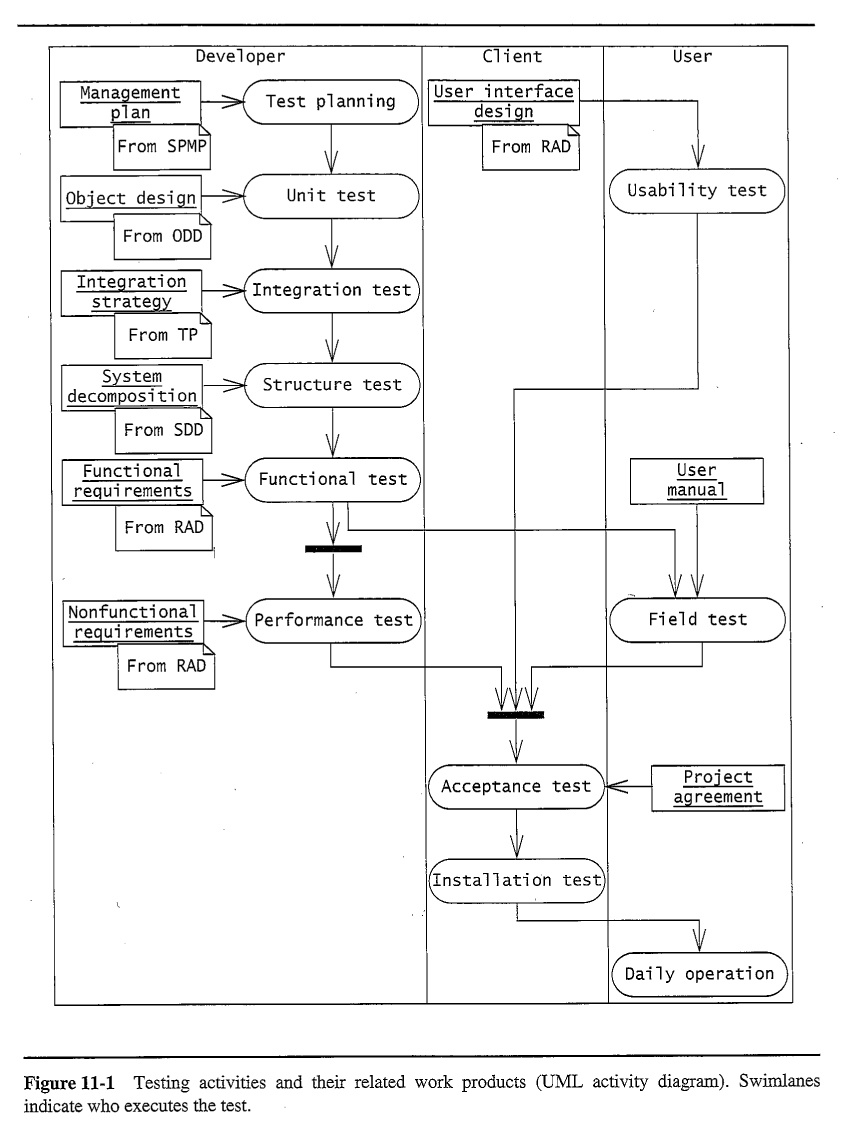

11.4.2 - Usability Testing

- Usability testing is concerned with two factors:

    - The user interface, including how efficiently users can accomplish tasks, how quickly they learn to use the system, and the rate at which they make mistakes.

    - Agreement between what the user expects the system to do and what it actually does. ( Validation. )

- Three types of usability tests:

    - Scenario test, in which users are asked to act through a particular scenario using a prototype of the system. This is often done using paper mock-ups very early in the development process.

    - Prototype test, in which a ( software ) prototype of the system is used.

        - Horizontal prototypes implement a single layer in the system, such as the user interface layer.

        - Vertical prototypes implement all the functionality required to execute a particular use-case.

        - A Wizard of Oz prototype incorporates a human to provide the system response behind the prototyped user interface.

    - Product test, in which the completed product is evaluated by a small group of test subjects.

        - Alpha test is conducted in-house, with carefully selected test subjects, often company employees.

        - Beta test is conducted by real customers at their sites. Beta tests may or may not be restricted to carefully selected subjects.

11.4.3 - Unit Testing

Testing of individual units, such as a class or even an individual method.

- White box testing takes advantage of knowledge of the internal structure of the unit being tested.

- Black box testing is designed solely based on the specifications of the unit, without any knowledge of its internal workings.

Equivalence testing

Define ranges of input values ( equivalence classes ) for which all tests are expected to yield equivalent results, e.g. negative numbers, zero, positive integers, and numbers larger than 32768. One representative value from each range is then tested.
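A minimal sketch of equivalence testing, assuming a hypothetical unit `sign` whose inputs fall into three classes. The function and the chosen representatives are illustrative only.

```python
def sign(n):
    """Hypothetical unit under test."""
    if n < 0:
        return -1
    if n == 0:
        return 0
    return 1


# One representative input and its expected output per equivalence class.
equivalence_classes = {
    "negative": (-7, -1),
    "zero": (0, 0),
    "positive": (12, 1),
}


def run_equivalence_tests():
    """Return a pass/fail flag for each equivalence class."""
    return {
        name: sign(representative) == expected
        for name, (representative, expected) in equivalence_classes.items()
    }
```

Any other member of a class ( say -3 instead of -7 ) is assumed to behave the same way, which is what keeps the number of test cases small.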

Boundary testing

Values on the "edges" or boundaries of equivalence ranges are most likely to cause faults. For example, leap years have special rules for years divisible by 100 or 400. ( Empty strings are often taken as a special or boundary case. )
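The leap year example might be tested as sketched below. The `is_leap` function is an assumed unit under test using the Gregorian rules, and the years were chosen to sit on the divisible-by-4, divisible-by-100, and divisible-by-400 boundaries.

```python
def is_leap(year):
    """Assumed unit under test: Gregorian leap year rule."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# Boundary years and the expected result for each.
boundary_cases = {
    1996: True,   # divisible by 4 only
    1999: False,  # not divisible by 4
    1900: False,  # divisible by 100 but not by 400
    2000: True,   # divisible by 400
    2100: False,  # next century boundary
}

# Years for which the unit disagrees with the expected result.
failures = [year for year, expected in boundary_cases.items() if is_leap(year) != expected]
```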

Path testing

Ensure that every path through the flow chart is followed by at least one test case.
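As a rough sketch, the hypothetical unit below has two decisions and therefore four paths. It is instrumented to report which path was taken, purely so the example can check coverage; real path testing would normally rely on a coverage tool instead.

```python
def shipping_cost(weight_kg, express):
    """Hypothetical unit with two decisions, hence four paths."""
    path = []
    if weight_kg > 10:
        path.append("heavy")
        cost = 20
    else:
        path.append("light")
        cost = 5
    if express:
        path.append("express")
        cost *= 2
    else:
        path.append("standard")
    return cost, tuple(path)


# One test case per path: ((weight_kg, express), expected cost).
cases = [((12, True), 40), ((12, False), 20), ((3, True), 10), ((3, False), 5)]

all_paths = {(w, s) for w in ("heavy", "light") for s in ("express", "standard")}


def run_path_tests():
    """Return the set of paths exercised and any cases with a wrong result."""
    covered, wrong = set(), []
    for args, expected in cases:
        cost, path = shipping_cost(*args)
        covered.add(path)
        if cost != expected:
            wrong.append(args)
    return covered, wrong


covered, wrong = run_path_tests()
```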

State-based testing

- Ensure that every state transition is tested by at least one test case.

- May require knowing and setting the internal state of objects, which poses a problem in the face of information hiding and encapsulation.
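A minimal sketch of state-based testing, assuming a hypothetical `Turnstile` class with two states and two events. Note that the test writes to the private `_state` attribute to reach each start state, which illustrates the encapsulation problem mentioned above.

```python
class Turnstile:
    """Hypothetical unit with states 'locked' and 'unlocked'."""

    def __init__(self):
        self._state = "locked"

    def coin(self):
        self._state = "unlocked"

    def push(self):
        self._state = "locked"

    @property
    def state(self):
        return self._state


# Every transition: (start state, event, expected end state).
transitions = [
    ("locked", "coin", "unlocked"),
    ("locked", "push", "locked"),
    ("unlocked", "coin", "unlocked"),
    ("unlocked", "push", "locked"),
]


def check_transitions():
    """Exercise each transition once and return the ones that misbehave."""
    failures = []
    for start, event, expected in transitions:
        turnstile = Turnstile()
        turnstile._state = start  # reaches past the public interface
        getattr(turnstile, event)()
        if turnstile.state != expected:
            failures.append((start, event, turnstile.state))
    return failures
```

An alternative that respects encapsulation is to drive the object into each start state through its public methods, at the cost of longer test sequences.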

Polymorphism testing

All possible bindings need to be tested for each method that can be sent. ( And sometimes all combinations of bindings. )
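The sketch below assumes a hypothetical `Shape` hierarchy. The call `shape.area()` inside `describe` can bind to either subclass, so the test supplies one case per binding.

```python
import math


class Shape:
    def area(self):
        raise NotImplementedError


class Square(Shape):
    def __init__(self, side):
        self.side = side

    def area(self):
        return self.side * self.side


class Circle(Shape):
    def __init__(self, radius):
        self.radius = radius

    def area(self):
        return math.pi * self.radius ** 2


def describe(shape):
    """Unit under test: shape.area() is bound at run time."""
    return f"{type(shape).__name__}: {shape.area():.2f}"


# One test case per possible binding of shape.area().
bindings = [(Square(2), "Square: 4.00"), (Circle(1), "Circle: 3.14")]
results = [describe(shape) == expected for shape, expected in bindings]
```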

Sample Unit Testing

The following are sample solutions to old exam questions on testing. They are somewhat more complete than what is really expected of students taking an exam, but more appropriate for inclusion in a report.

11.4.4 - Integration Testing

After units are tested individually, combinations of units must be tested, focusing primarily on the interfaces between the units.

Horizontal integration testing strategies

- Assumes that the architecture has a layered structure.

- The big bang approach tests the entire system once the unit tests are complete.

- Bottom-up testing first integrates units at the bottom level of the hierarchy, and works up.

- Top-down testing integrates from the top level first, working down.

- Sandwich Testing combines bottom-up and top-down testing. First the top and bottom layers are unit tested, and then they are integrated with the middle or "target" layer in parallel. Target layer units are not individually unit tested.

- Modified Sandwich Testing unit tests all layers before commencing integration testing.

Vertical integration testing strategies

Focus on early testing and integration of all units necessary to provide partial functionality of the product.

11.4.5 - System Testing

Functional testing

Tests functional requirements, as outlined in use-cases.

Performance testing

Tests non-functional requirements, as outlined in the requirements documents:

- Stress testing tests the system under heavy loads.

- Volume testing tests the system with large volumes of data, such as large files or databases.

- Security testing tries to find security faults, and often involves tiger teams that attempt to break into the system.

- Timing testing tests timing constraints.

- Recovery testing tests the system's ability to recover from erroneous states.
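Of the performance tests above, timing testing is the easiest to sketch. The example assumes a hypothetical operation `lookup` and an arbitrary time limit; a real timing constraint would come from the requirements documents.

```python
import time


def elapsed_seconds(operation):
    """Run the operation once and return the wall-clock time it took."""
    start = time.perf_counter()
    operation()
    return time.perf_counter() - start


def meets_timing_constraint(operation, limit_seconds):
    """True if a single run of the operation finishes within the limit."""
    return elapsed_seconds(operation) <= limit_seconds


def lookup():
    # Hypothetical operation standing in for the system function being timed.
    return sorted(range(10_000))
```

A single measurement is noisy, so in practice the operation would likely be run several times and the worst or average time compared against the limit.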

Pilot testing

Testing done in the field by a select group of test subjects, e.g. alpha and beta tests.

Acceptance testing

Testing performed by the client, in the development environment.

Installation testing

Testing performed after the system has been installed at the client's site.

11.5.1 - Planning Testing

11.5.2 - Documenting Testing

Four types of testing documents:

- Test Plans cover most of the managerial aspects, such as testing schedules, budgets, and the allocation and scheduling of necessary resources.

- Test Specifications document the specific details of individual tests. Details include input data, output oracles, pass/fail criteria, time required, location of relevant files, associated requirements, and references to other documents as appropriate.

- Test Incident Reports document a specific execution of a specific test. They include who conducted the test, when the test was done, and the results of the test.

- Test Report Summary documents all of the failures found by all of the tests.
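If these documents are kept in machine-readable form, a test specification and an incident report might be represented roughly as follows. The class and field names are assumptions made for this sketch, not a standard format.

```python
from dataclasses import dataclass, field


@dataclass
class UnitTestSpec:
    """Hypothetical record for one test specification."""
    identifier: str
    input_data: dict
    expected_output: object          # the oracle
    pass_fail_criteria: str
    requirements: list = field(default_factory=list)


@dataclass
class IncidentReport:
    """Hypothetical record for one execution of one test."""
    specification: UnitTestSpec
    tester: str
    date: str
    actual_output: object

    @property
    def passed(self):
        # Simplest possible criterion: actual output equals the oracle.
        return self.actual_output == self.specification.expected_output


spec = UnitTestSpec(
    identifier="T-001",
    input_data={"year": 1900},
    expected_output=False,
    pass_fail_criteria="output equals oracle",
    requirements=["R-12"],
)
report = IncidentReport(spec, tester="jdoe", date="2024-01-15", actual_output=False)
```

A test report summary could then be produced by collecting every `IncidentReport` whose `passed` property is false.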

11.5.3 - Assigning Responsibilities

- Testing is best performed by someone other than the developer who produced the artifact being tested, particularly beyond the unit testing level.

- Some organizations have entire testing teams / departments.

- Incentives must be provided for finding faults, without penalizing those who produced them.

11.5.4 - Regression Testing

- Any changes to the system, such as fault corrections, design changes, or the introduction of new features or functionality, may cause the introduction of new faults into units that had already been tested.

- Re-testing of affected units is important, but it is generally not possible to re-test every affected unit every time a change is made.

- Selection of units to be re-tested may follow the following guidelines:

    - Retest dependent units. Subsystems that depend on a changed unit are most likely to break after the changes are made.

    - Retest risky use cases, i.e. the ones most likely to cause problems and the ones with the largest potential risk of damages.

    - Retest frequent use cases. Those cases most frequently executed are most likely to encounter a problem eventually.
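The first guideline, retesting dependent units, can be sketched as a graph traversal. The dependency map below is hypothetical; in practice it would be derived from the system design or build configuration.

```python
from collections import deque

# Hypothetical dependency map: unit -> units that directly depend on it.
dependents = {
    "Database": ["Inventory", "Billing"],
    "Inventory": ["OrderUI"],
    "Billing": ["OrderUI"],
    "OrderUI": [],
}


def units_to_retest(changed, dependents):
    """Return the changed unit plus every unit that depends on it,
    directly or indirectly (breadth-first search)."""
    seen = {changed}
    queue = deque([changed])
    while queue:
        unit = queue.popleft()
        for dependent in dependents.get(unit, []):
            if dependent not in seen:
                seen.add(dependent)
                queue.append(dependent)
    return seen
```

For example, `units_to_retest("Inventory", dependents)` selects only `Inventory` and `OrderUI`, leaving `Database` and `Billing` out of the regression run.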

11.5.5 - Automating Testing

11.5.6 - Model-Based Testing

The following material is excerpted from "Software Testing and Analysis - Process, Principles, and Techniques", by Pezze and Young. It is a required textbook when I teach CS 442, Software Engineering II.

The following material is excerpted from "Software Engineering 8", by Ian Sommerville. It is an alternate textbook that has been used in CS 440 in past semesters.

Sommerville's inspection process involves two meetings, one to get an overview understanding of the artifact, presented by the author, and another to review the findings of the inspection process:

Like Pezze, Sommerville breaks inspection checklists down into categories:

The format of Sommerville's test plan document is similar to our author's: